How Load Balancers Distribute Traffic

Imagine launching a new feature and suddenly your app is flooded with users: maybe a YouTube shoutout, a Product Hunt launch, or a festive sale rush.

If all those requests hit a single server, things fall apart fast:

- Slow responses

- Crashed servers

- Angry users refreshing the page

This is where load balancers quietly save the day.

In this post, we’ll break down:

- What a load balancer actually does

- How it distributes traffic

- The most common algorithms it uses

- What happens when servers fail

- Why every scalable system depends on it

No buzzwords. No fluff. Just how it really works.

What Is a Load Balancer?

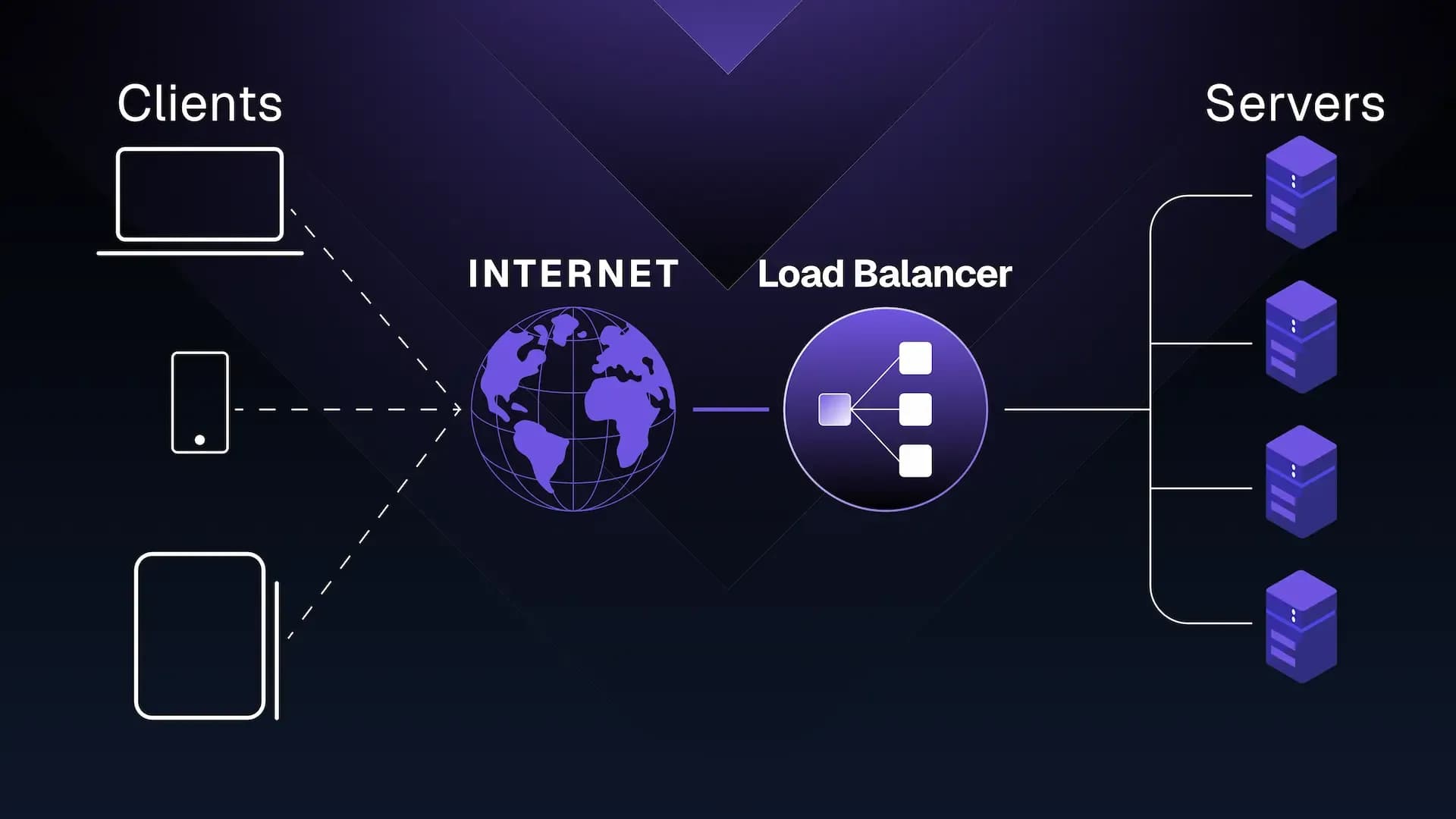

A load balancer sits between users and your backend servers.

Instead of users directly talking to your app servers, they talk to the load balancer. The load balancer then decides:

“Which server should handle this request?”

Think of it like a traffic police officer at a busy junction, directing vehicles so no single road gets jammed.

Simple flow:

User → Load Balancer → Backend Server

From the outside, users see one application.

Behind the scenes, traffic is being carefully distributed.

Why Load Balancers Exist

Without a load balancer:

- One server becomes a single point of failure

- Scaling means manual DNS changes

- When that one server crashes or is overloaded, your whole app goes down with it

With a load balancer:

- Traffic is spread across multiple servers

- Servers can be added or removed safely

- Failures are handled automatically

This is why load balancers are foundational infrastructure, not an “advanced optimization”.

How Traffic Reaches the Load Balancer

Here’s what actually happens when a user opens your app:

- The user enters your domain (e.g., app.example.com)

- DNS points the domain to the load balancer’s IP

- Every request now hits the load balancer first

- The load balancer forwards the request to a backend server

- The response flows back through the load balancer to the user

From the user’s perspective, nothing looks different.

Behind the scenes, the load balancer controls where every request goes.
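To make this flow concrete, here is a minimal in-process model in Python. It assumes backends are plain functions rather than real servers on the network. The names `Request`, `Response`, `make_backend`, and `LoadBalancer` are hypothetical and only serve as illustration. The client only ever calls the load balancer, which picks a backend, forwards the request, and relays the response back.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import Callable


@dataclass
class Request:
    path: str


@dataclass
class Response:
    status: int
    body: str


# Hypothetical: a "backend" is just a function that turns a request into a response.
Backend = Callable[[Request], Response]


def make_backend(name: str) -> Backend:
    def handle(req: Request) -> Response:
        return Response(200, f"{name} handled {req.path}")
    return handle


class LoadBalancer:
    """The single entry point clients talk to (what DNS would point at)."""

    def __init__(self, backends: list[Backend]) -> None:
        self._next = cycle(backends)  # simplest possible choice: rotate in order

    def handle(self, req: Request) -> Response:
        backend = next(self._next)  # 1. pick a backend
        response = backend(req)     # 2. forward the request to it
        return response             # 3. relay the response back to the client


lb = LoadBalancer([make_backend("server-a"), make_backend("server-b")])
print(lb.handle(Request("/home")).body)  # server-a handled /home
print(lb.handle(Request("/home")).body)  # server-b handled /home
```

The client code never learns which backend answered. That is what lets you add or remove servers behind the balancer without clients changing anything.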

Common Traffic Distribution Strategies

1. Round Robin

Requests are sent sequentially:

Server A → Server B → Server C → repeat

Pros

- Simple and predictable

- Works well when servers are identical

Cons

- Ignores real-time server load

Best for: small systems with evenly sized servers
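Here is a minimal round-robin picker in Python, assuming servers are identified by simple names like `"A"`. It keeps an index and advances it on every pick, wrapping around at the end. It never looks at how busy a server is, which is exactly the "ignores real-time load" weakness listed above.

```python
class RoundRobin:
    """Hands out servers in a fixed, repeating order (not thread-safe)."""

    def __init__(self, servers: list[str]) -> None:
        self.servers = list(servers)
        self.index = 0  # position of the next server to hand out

    def pick(self) -> str:
        server = self.servers[self.index]
        # Advance and wrap around so the order repeats forever.
        self.index = (self.index + 1) % len(self.servers)
        return server


rr = RoundRobin(["A", "B", "C"])
print([rr.pick() for _ in range(7)])  # ['A', 'B', 'C', 'A', 'B', 'C', 'A']
```

A real balancer handling concurrent requests would need to protect `index` with a lock or an atomic counter. This sketch leaves that out.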

2. Least Connections

The load balancer asks:

“Which server is handling the fewest active connections right now?”

Pros

- Adapts to uneven traffic

- Prevents overloaded servers

Best for: APIs and long-running requests
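A minimal sketch in Python follows. It assumes the balancer can see when each request starts and finishes; the hypothetical `acquire` and `release` methods stand in for those two moments. The scenario shows the key difference from round robin: a server stuck on a long request stops receiving new work.

```python
class LeastConnections:
    """Send each new request to the server with the fewest in-flight requests."""

    def __init__(self, servers: list[str]) -> None:
        # Number of requests each server is currently working on.
        self.active = {s: 0 for s in servers}

    def acquire(self) -> str:
        # min() returns the first server (in insertion order) on a tie.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Must be called when the request finishes, or counts drift upward.
        self.active[server] -= 1


lc = LeastConnections(["A", "B"])
slow = lc.acquire()   # "A" starts a long-running request
quick = lc.acquire()  # "B", because A is busy
lc.release(quick)     # B finishes quickly
print(lc.acquire())   # "B" again: A still has 1 active request
```

Plain round robin would have sent that last request to A, right behind the slow one.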

3. Weighted Distribution

Not all servers are equal.

Example:

- Server A (weight 3)

- Server B (weight 1)

Server A receives three times as much traffic as Server B: 3 out of every 4 requests (75%), versus 1 in 4 (25%) for B.

Pros

- Ideal for mixed instance sizes

- Useful during gradual scaling
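One common way to implement weights is "smooth" weighted round robin, the variant popularized by nginx. Below is a minimal Python sketch of that technique using the example weights above; the class name is hypothetical. On each pick, every server earns credit equal to its weight, the server with the most credit wins, and the winner pays back the total weight. This interleaves servers instead of sending A three requests in a burst.

```python
class SmoothWeightedRoundRobin:
    """Weighted rotation that spreads each server's share evenly over time."""

    def __init__(self, weights: dict[str, int]) -> None:
        self.weights = dict(weights)
        self.current = {s: 0 for s in weights}  # running credit per server
        self.total = sum(weights.values())

    def pick(self) -> str:
        # Every server earns credit equal to its weight...
        for server, weight in self.weights.items():
            self.current[server] += weight
        # ...the server with the most credit wins (first one wins a tie)...
        chosen = max(self.current, key=self.current.get)
        # ...and pays back the total, so it cannot win every time.
        self.current[chosen] -= self.total
        return chosen


wrr = SmoothWeightedRoundRobin({"A": 3, "B": 1})
print([wrr.pick() for _ in range(8)])  # ['A', 'A', 'B', 'A', 'A', 'A', 'B', 'A']
```

Over every 4 picks, A is chosen 3 times and B once, matching the 3:1 weights.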

4. IP Hashing (Sticky Sessions)

Requests from the same client IP always go to the same server, as long as the set of servers doesn’t change.

Pros

- Maintains session consistency

Cons

- Can create uneven load (for example, many users behind one office or carrier NAT share a single IP)

Best for: legacy apps relying on in-memory sessions
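A minimal IP-hashing sketch in Python follows, assuming a simple hash-modulo scheme. The function name `pick_server` and the sample IP are hypothetical. It uses `hashlib` rather than the built-in `hash()`, because Python randomizes string hashes per process, so two balancer processes would disagree about where a client belongs.

```python
import hashlib


def pick_server(client_ip: str, servers: list[str]) -> str:
    """Map a client IP to a server deterministically (hash modulo pool size)."""
    # A stable hash: the same IP gives the same digest in every process.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["A", "B", "C"]
first = pick_server("203.0.113.7", servers)
again = pick_server("203.0.113.7", servers)
print(first == again)  # True: same IP, same server
```

Because the index is taken modulo the number of servers, adding or removing a server changes the mapping for many clients at once and breaks their sessions. Consistent hashing is the usual technique for limiting that reshuffle.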

Health Checks: The Secret Sauce

Load balancers don’t blindly forward traffic.

They constantly ask each server:

“Are you alive?”

This is done using health checks:

- Send a request to a specific endpoint (e.g., /health)

- Expect a successful response (such as HTTP 200) within a timeout

- Mark the server unhealthy if it fails, usually after a few consecutive failures

If a server goes down:

- Traffic is rerouted automatically

- Most users never notice (only requests already in flight to the failed server may error)

- No manual intervention needed

This is how high-availability systems survive real-world failures.
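Here is a minimal health-check sketch in Python, assuming an HTTP `/health` endpoint that returns 200 when the server is fine. `probe` performs one check with the standard library's `urllib.request`. `HealthState` flips a server's status only after several results in a row, so one slow response doesn't eject a healthy server. The thresholds (3 failures to go down, 2 successes to come back) and the URL in the comment are hypothetical; real load balancers let you configure them.

```python
import urllib.request


def probe(url: str, timeout: float = 2.0) -> bool:
    """One health check: True only if the endpoint answers HTTP 200 in time."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # Connection refused, DNS failure, timeout, or a 4xx/5xx status
        # (urllib raises HTTPError, a subclass of OSError, for those).
        return False


class HealthState:
    """Change a server's status only after several consecutive results."""

    def __init__(self, fail_threshold: int = 3, pass_threshold: int = 2) -> None:
        self.fail_threshold = fail_threshold
        self.pass_threshold = pass_threshold
        self.healthy = True
        self._streak = 0  # consecutive results that disagree with current status

    def record(self, ok: bool) -> bool:
        if ok == self.healthy:
            self._streak = 0  # result agrees with current status: reset
        else:
            self._streak += 1
            limit = self.pass_threshold if ok else self.fail_threshold
            if self._streak >= limit:
                self.healthy = ok  # enough evidence: flip the status
                self._streak = 0
        return self.healthy


# In a real loop, something like (hypothetical address):
#   state.record(probe("http://10.0.0.2:8080/health"))
state = HealthState(fail_threshold=3, pass_threshold=2)
for ok in [False, False, True, False, False, False]:
    state.record(ok)
print(state.healthy)  # False: three failures in a row
```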

What Happens When a Server Crashes?

Let’s say Server B suddenly dies:

- Health check fails

- Load balancer removes Server B from rotation

- Traffic continues to Servers A and C

- When Server B recovers and passes its health checks again, it’s added back automatically

This is fault tolerance, one of the biggest advantages of load balancing.
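Here is a minimal Python sketch of that rotation. It assumes a separate health checker calls the hypothetical `mark_down` and `mark_up` methods when a server fails or recovers. The picker is ordinary round robin that skips servers marked down, and it raises an error only if every server is down.

```python
class HealthyRoundRobin:
    """Round robin that only hands out servers currently marked healthy."""

    def __init__(self, servers: list[str]) -> None:
        self.servers = list(servers)
        self.down: set[str] = set()
        self._index = 0

    def mark_down(self, server: str) -> None:
        self.down.add(server)      # health checks failing: leave the rotation

    def mark_up(self, server: str) -> None:
        self.down.discard(server)  # health checks passing again: rejoin

    def pick(self) -> str:
        # Try each server at most once per pick, skipping ones marked down.
        for _ in range(len(self.servers)):
            server = self.servers[self._index]
            self._index = (self._index + 1) % len(self.servers)
            if server not in self.down:
                return server
        raise RuntimeError("no healthy servers available")


lb = HealthyRoundRobin(["A", "B", "C"])
lb.mark_down("B")
print([lb.pick() for _ in range(4)])  # ['A', 'C', 'A', 'C']
lb.mark_up("B")
print([lb.pick() for _ in range(3)])  # ['A', 'B', 'C']
```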

Layer 4 vs Layer 7 Load Balancing

Layer 4 (Transport Layer)

- Operates on IP and port

- Extremely fast

- No awareness of request content

Example: TCP traffic routing

Layer 7 (Application Layer)

- Understands HTTP, headers, paths

- Can route based on:

  - URLs

  - Cookies

  - Headers

Example:

/api → API servers

/admin → Admin servers

Most modern web applications rely on Layer 7 load balancing.
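Here is a minimal sketch of the path-based routing from the example above, in Python. It assumes longest-prefix matching on whole path segments, one common rule, though products differ. The pool names (`api-1`, `admin-1`, `web-1`, and so on) are hypothetical. Once a pool is chosen, the balancer would pick a server inside it using one of the distribution strategies described earlier.

```python
def route(path: str, routes: dict[str, list[str]], default: list[str]) -> list[str]:
    """Return the server pool for a request path (longest matching prefix wins)."""
    best = ""
    for prefix in routes:
        # Match whole segments: "/api" matches "/api/users" but not "/apiary".
        matches = path == prefix or path.startswith(prefix.rstrip("/") + "/")
        if matches and len(prefix) > len(best):
            best = prefix
    return routes[best] if best else default


ROUTES = {"/api": ["api-1", "api-2"], "/admin": ["admin-1"]}
WEB = ["web-1", "web-2"]

print(route("/api/users/42", ROUTES, WEB))  # ['api-1', 'api-2']
print(route("/admin", ROUTES, WEB))         # ['admin-1']
print(route("/apiary", ROUTES, WEB))        # ['web-1', 'web-2']
```

A Layer 4 balancer cannot make this decision at all, because it never parses the HTTP request to see the path.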

Load Balancers and Scaling

Load balancers enable:

- Horizontal scaling (adding more servers)

- Zero-downtime deployments

- Rolling updates

You can:

- Add servers during peak traffic (sales, festivals, launches)

- Remove servers during low usage

- Deploy new versions gradually

All without users noticing anything.
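A rolling update is the simplest case to sketch. Here is a minimal Python version, assuming a hypothetical `deploy` callback that installs the new version on one server. Servers are updated one at a time: take the server out of rotation, update it, and put it back, so the others keep serving traffic throughout. Real setups also drain in-flight requests before removal and wait for health checks to pass before re-adding. Those steps appear only as comments here.

```python
from typing import Callable


def rolling_update(servers: list[str], deploy: Callable[[str], None]) -> list[list[str]]:
    """Update servers one at a time; return who was serving during each step."""
    in_rotation = list(servers)
    history = []
    for server in servers:
        in_rotation.remove(server)         # 1. pull it from the load balancer
                                           #    (real setups drain connections first)
        history.append(list(in_rotation))  #    the rest keep handling traffic
        deploy(server)                     # 2. install the new version
        in_rotation.append(server)         # 3. re-add it (after health checks pass)
    return history


deployed = []
steps = rolling_update(["A", "B", "C"], deploy=deployed.append)
print(steps)     # [['B', 'C'], ['C', 'A'], ['A', 'B']]
print(deployed)  # ['A', 'B', 'C']
```

At every step, two of the three servers are serving. With only one server the rotation would be empty during its update, which is why zero-downtime deploys need at least two.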

A Real-World Setup

A typical production architecture looks like this:

Users
  ↓
Load Balancer
  ↓
Multiple App Servers
  ↓
Database

When traffic grows:

- Add more app servers

- Load balancer distributes requests

- App keeps running smoothly

This is how products scale from 10 users to 10 million.

Why Developers Should Care

Even if you’re not a DevOps engineer:

- Load balancers affect performance

- They influence session handling

- They impact error rates

- They decide uptime

Understanding them helps you:

- Debug production issues faster

- Design scalable systems

- Make better architectural decisions

Final Thoughts

Load balancers are invisible when they work and disastrous when missing.

They don’t:

- Magically make your app fast

- Fix inefficient code

But they protect your system from collapsing under pressure.

If your app has more than one server, or plans to, a load balancer isn’t optional. It’s essential.